Processing cubes in SSIS is pretty simple if all you want to do is pick a partition and process it. But if that partition is time based, which is quite likely, then the partition you process each day, week or month will change. So what are your options?

First, you can change your package each month, or use a variable with the partition name. Probably not the best of options, as someone is going to have to physically make the change each month or come up with some clever method of changing your variable using something like VBA, Excel, etc. Inefficient if you ask me, and defeating the object. Couple this with creating a year's worth of partitions up front, or creating them every time a new one is required, and the whole task becomes quite laborious.

So what else can be done? My personal favourite is to use dynamic XMLA but this has a few of its own pitfalls you have to be aware of. First let’s look at the XMLA script for creating a partition and some of the key tags you have to be aware of.

    <Create xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">
      <ParentObject>
        <DatabaseID>Adventure Works DW</DatabaseID>
        <CubeID>Adventure Works DW</CubeID>
        <MeasureGroupID>Fact Internet Sales 1</MeasureGroupID>
      </ParentObject>
      <ObjectDefinition>
        <Partition xmlns:xsd="http://www.w3.org/2001/XMLSchema"
                   xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
          <ID>Internet_Sales_2001</ID>
          <Name>Internet_Sales_2001</Name>
          <Annotations>
            <Annotation>
              <Name>AggregationPercent</Name>
              <Value>20</Value>
            </Annotation>
          </Annotations>
          <Source xsi:type="QueryBinding">
            <DataSourceID>Adventure Works DW</DataSourceID>
            <QueryDefinition>SELECT * FROM [dbo].[FactInternetSales]
    WHERE OrderDateKey &lt;= '184'</QueryDefinition>
          </Source>
          <StorageMode>Molap</StorageMode>
          <ProcessingMode>Regular</ProcessingMode>
          <Slice>[Date].[Calendar].[July 2006]</Slice>
          <ProactiveCaching>
            <SilenceInterval>-PT1S</SilenceInterval>
            <Latency>-PT1S</Latency>
            <SilenceOverrideInterval>-PT1S</SilenceOverrideInterval>
            <ForceRebuildInterval>-PT1S</ForceRebuildInterval>
            <AggregationStorage>MolapOnly</AggregationStorage>
            <Source xsi:type="ProactiveCachingInheritedBinding">
              <NotificationTechnique>Server</NotificationTechnique>
            </Source>
          </ProactiveCaching>
          <EstimatedRows>1013</EstimatedRows>
          <AggregationDesignID>AggregationDesign</AggregationDesignID>
        </Partition>
      </ObjectDefinition>
    </Create>

Above is the create script of one of the partitions from the Adventure Works cube. There are some obvious elements in there, but the ones that you need to be aware of when building your script dynamically are as follows:

ID – The most important one. Even though here it is the same as the name of the partition, there are a couple of things to remember. Firstly, they don't have to be the same; secondly, by default the ID is taken from the partition name. Why is this important? When you first create a measure group in your Analysis Services project (in Visual Studio), the default partition is named after the measure group, and so is its ID. The ID is the unique identifier of a partition within a measure group, so if your partitions are named MeasureGroup1_200608 and MeasureGroup1_200609 you may find the IDs are MeasureGroup1 and MeasureGroup1_200609, and processing a partition in XMLA is based on the partition ID.

The partition ID is something you can't change in an Analysis Services project. Well, I haven't found a way yet, so in my little world it can't be changed! The first thing I would do is create a duplicate partition in the cube design and name it what I would like the ID to be. Once it is created you can change the name to whatever you like; the ID won't change.

The Name element becomes pretty arbitrary, but you still need to ensure that every partition you create has a different name. Again, if you want it to be the same as the ID then name it accordingly.

The final two are the QueryDefinition and Slice. This is where you may have to step back and think about the overall design of cubes and their dimension structures. For the purpose of this post I'm talking about partitions being sliced by date, because in my experience that's how most cubes will get sliced. Where it's suitable I would try to make the key of the date dimension a meaningful value, i.e. 20060926 = 26th September 2006. Before we go into multiple discussions about why this is a good or bad idea, stop. It's something I do, I know its pros and cons, and I've found it to be beneficial on a hell of a lot of occasions, this example being one of them.

My QueryDefinition value will be the query that forms the dataset my partition is processed from. If the partition is query bound you'll generally see a where clause like “WHERE DateKey BETWEEN 20060900 AND 20060999”. If the partition is table bound, more than likely you're using views to distinguish the different data sets.

Finally, the partition slice. Even though this is documented as being for information purposes only, there are some well documented grey areas around not populating it. My recommendation is to put a value in anyway, even if it is only for information. The slice value for, let's say, the September 2006 partition in my cube would look something like “<Slice>[Date].[Calendar].[September 2006]</Slice>” or ideally “<Slice>[Date].[Calendar].&amp;[200609]</Slice>” (the & in front of the member key has to be written as &amp; inside XMLA). Using the key for the slice shows why you need to think about this kind of thing when designing your dimensions at the data mart stage as well as the OLAP stage: we're making the month level of the dimension a critical value.
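To make the "meaningful key" idea concrete, here is a minimal sketch of the arithmetic behind it. It is plain Python rather than anything you would run inside SSIS, and the function names `to_date_key` and `month_key_range` are made up for illustration. It assumes integer keys in yyyymmdd form, as described above.

```python
from datetime import date


def to_date_key(d: date) -> int:
    """Turn a date into a yyyymmdd integer key, e.g. 20060926."""
    return d.year * 10000 + d.month * 100 + d.day


def month_key_range(year: int, month: int) -> tuple:
    """Return the (low, high) key bounds that cover every day of one month.

    Using 00 and 99 as the day part avoids having to know the month length.
    """
    if not 1 <= month <= 12:
        raise ValueError("month must be between 1 and 12")
    base = year * 10000 + month * 100
    return base, base + 99


low, high = month_key_range(2006, 9)
print(to_date_key(date(2006, 9, 26)))                  # 20060926
print(f"WHERE DateKey BETWEEN {low} AND {high}")
```

Because the key is readable, the bounds for any month fall straight out of the year and month, with no lookup against the date dimension needed.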

Looking at those key elements of the XMLA statement for creating a partition we get something like this:

    <ID>200609</ID>
    <Name>Measure_Group_Name_200609</Name>
    <QueryDefinition>
    SELECT *
    FROM FactTable
    WHERE DateKey BETWEEN 20060900 AND 20060999
    </QueryDefinition>
    <Slice>[Date].[Calendar].&amp;[200609]</Slice>

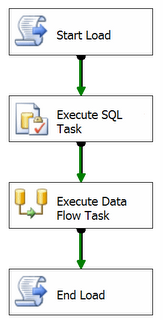

Each of the elements has the common value of “200609”, which can be replaced, allowing partitions to be created before processing, in this example at month end. To actually execute this code within an SSIS package I populate the Source expression of an Analysis Services Execute DDL task. This can be done either via direct input or via a variable (selected by the SourceType property). The expression takes the variable, or direct input XMLA, and evaluates it based on any parameters you need to input. The parameters in this case would replace any reference to 200609 so that the new partition is created correctly.
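If it helps to see the substitution on its own, away from the Execute DDL task, here is a rough sketch in Python. It is not SSIS expression syntax. The `FRAGMENT` template and the `build_fragment` function are hypothetical names of mine, and the template only covers the four elements discussed above, not a complete Create command.

```python
from datetime import date
from string import Template

# Hypothetical template holding only the elements that change each month.
# $period stands in for the hard-coded "200609"; the & of the member key is
# written as &amp; so the fragment stays well-formed XML.
FRAGMENT = Template(
    "<ID>$period</ID>\n"
    "<Name>Measure_Group_Name_$period</Name>\n"
    "<QueryDefinition>SELECT * FROM FactTable "
    "WHERE DateKey BETWEEN ${period}00 AND ${period}99</QueryDefinition>\n"
    "<Slice>[Date].[Calendar].&amp;[$period]</Slice>"
)


def build_fragment(run_date: date) -> str:
    """Fill the template with the yyyyMM period of the given date."""
    period = run_date.strftime("%Y%m")
    return FRAGMENT.substitute(period=period)


print(build_fragment(date(2006, 9, 30)))
```

The point is simply that one value drives the ID, the name, the query and the slice, so only one parameter has to change from month to month.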

A couple of things to remember when creating an expression from XMLA like this: the script has to be in double quotes, and any double quotes within the script have to be replaced with \". That's backslash and double quote.

Finally, before you run the XMLA it really would be good practice to know whether the partition already exists before you attempt to create it. AMO (Analysis Management Objects) is the answer to that. The script below, kindly mixed, matched and cribbed from those lovely Project REAL chaps, will populate an SSIS Boolean variable with the answer to whether the partition exists or not. Don't forget to add AMO as a reference in the SSIS script task.
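Escaping by hand gets tedious on a script the size of a Create command, so here is a small sketch of doing it mechanically. It is Python, used only to prepare the text you would paste into the expression, and `to_ssis_string_literal` is a made-up helper name. It also doubles any backslashes, since the backslash is the escape character in SSIS expression string literals.

```python
def to_ssis_string_literal(text: str) -> str:
    """Wrap text in double quotes, escaping backslashes and double quotes."""
    # Backslashes are doubled first so the ones added for quotes are left alone.
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    return '"' + escaped + '"'


print(to_ssis_string_literal('<Source xsi:type="QueryBinding">'))
# "<Source xsi:type=\"QueryBinding\">"
```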

    Imports System
    Imports System.Data
    Imports System.Math
    Imports Microsoft.SqlServer.Dts.Runtime
    Imports Microsoft.AnalysisServices

    Public Class ScriptMain

        Public Sub Main()
            Dim WellIsItThere As Boolean
            Dim ASConnection As ConnectionManager
            Dim ASServer As String
            Dim ASDatabase As String
            Dim ASCube As String
            Dim ASMeasureGrp As String
            Dim ASPartition As String

            ASPartition = Now().ToString("yyyyMM") ' This will give us "200609"
            ASConnection = Dts.Connections("AnalysisServer") ' This is the name of the connection manager that you're going to connect to in the package
            ASServer = CStr(ASConnection.Properties("ServerName").GetValue(ASConnection))
            ASDatabase = CStr(ASConnection.Properties("InitialCatalog").GetValue(ASConnection))
            ASCube = "Adventure Works" ' How you populate this is really up to you
            ASMeasureGrp = "Internet Sales" ' And again the population of this is up to you

            WellIsItThere = IsPartitionThere(ASServer, ASDatabase, ASCube, ASMeasureGrp, ASPartition)
            Dts.Variables("PartitionExists").Value = WellIsItThere
            Dts.TaskResult = Dts.Results.Success
        End Sub

        Public Function IsPartitionThere(ByVal sServer As String, _
                ByVal sDatabase As String, ByVal sCube As String, _
                ByVal sMeasureGroup As String, _
                ByVal sPartition As String) As Boolean

            ' By default, we will assume that it isn't there
            ' Only if we get all of the way to the end and everything is
            ' found, will we set it true
            Dim bIsPartitionThere As Boolean = False
            Dim oServer As New Microsoft.AnalysisServices.Server

            ' connect to the server and start scanning down the
            ' object hierarchy
            oServer.Connect(sServer)
            Dim oDB As Database = oServer.Databases.FindByName(sDatabase)
            If oDB Is Nothing Then
                MsgBox("Did not find expected database: " & sDatabase, _
                    MsgBoxStyle.OkOnly, "Error looking for partition")
                GoTo Done
            Else
                Dim oCube As Cube = oDB.Cubes.FindByName(sCube)
                If oCube Is Nothing Then
                    MsgBox("Did not find expected cube: " & sCube, _
                        MsgBoxStyle.OkOnly, "Error looking for partition")
                    GoTo Done
                Else
                    Dim oMG As MeasureGroup = _
                        oCube.MeasureGroups.FindByName(sMeasureGroup)
                    If oMG Is Nothing Then
                        MsgBox("Did not find expected Measure Group: " & _
                            sMeasureGroup, _
                            MsgBoxStyle.OkOnly, _
                            "Error looking for partition")
                        GoTo Done
                    Else
                        '-- This is the real test -- to see if the partition
                        ' is really there
                        Dim oPart As Partition = _
                            oMG.Partitions.Find(sPartition)
                        If Not oPart Is Nothing Then
                            ' This is the only place to set the value to TRUE
                            bIsPartitionThere = True
                        End If
                    End If
                End If
            End If

    Done:
            oServer.Disconnect() ' disconnect from the server -- we are done

    NoConnection:
            Return bIsPartitionThere
        End Function

    End Class